Posts tagged haskell

Formatting serial streams in hardware

12 August 2024 (programming haskell clash fpga)

I've been playing around with building a Sudoku solver circuit on an FPGA: you connect to it via a serial port, send it a Sudoku grid with some unknown cells, and after solving it, you get back the solved (fully filled-in) grid. I wanted the output to be nicely human-readable, for example for a 3,3-Sudoku (i.e. the usual Sudoku size where the grid is made up of a 3 ⨯ 3 matrix of 3 ⨯ 3 boxes):

    4 2 1 9 5 8 6 3 7
    8 7 3 6 2 1 9 5 4
    5 9 6 4 7 3 2 1 8
    3 1 2 8 4 6 7 9 5
    7 6 8 5 1 9 3 4 2
    9 4 5 7 3 2 8 6 1
    2 8 9 1 6 4 5 7 3
    1 3 7 2 9 5 4 8 6
    6 5 4 3 8 7 1 2 9

This post is about how I structured the stream transformer that produces all the right spaces and newlines, yielding a clash-protocols based circuit.
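As a point of reference for what such a transformer has to produce, here is a pure, list-level sketch in plain Haskell. It is not the clash-protocols circuit from the post: the names `chunksOf` and `formatGrid`, the box-width and box-height parameters, and the exact layout (one space between cells, two between boxes, a blank line between bands of boxes) are all assumptions made for illustration.

```haskell
import Data.List (intercalate, intersperse)

-- | Split a list into consecutive chunks of length k (k > 0).
chunksOf :: Int -> [a] -> [[a]]
chunksOf _ [] = []
chunksOf k xs = let (h, t) = splitAt k xs in h : chunksOf k t

-- | Lay out a grid given as a flat, row-major list of cell characters.
-- Boxes are assumed to be boxW cells wide and boxH cells tall, so the
-- whole grid has boxW * boxH cells per side.
formatGrid :: Int -> Int -> [Char] -> String
formatGrid boxW boxH cells = intercalate "\n" (map formatBand bands)
  where
    side  = boxW * boxH
    rows  = chunksOf side cells   -- one list per grid row
    bands = chunksOf boxH rows    -- group rows into bands of boxes
    -- Every row ends in a newline; bands are separated by a blank line.
    formatBand = unlines . map formatRow
    -- One space between cells, two spaces between boxes.
    formatRow  = intercalate "  " . map (intersperse ' ') . chunksOf boxW
```

In GHCi, `putStr (formatGrid 3 3 solved)` prints an 81-character `solved` string in this layout. A hardware version has to emit the same separators one character at a time, which is where the counters and state of a stream transformer come in.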

Continue reading »

Getting my HomeLab-2 sea legs

21 October 2023 (homelab programming retrochallenge retrochallenge2023 retro haskell)

Previously, we left off our HomeLab-2 game jam story with two somewhat working emulators, and a creeping realization that we still haven't written a single line of code.

Actually, it was a bit worse than that. My initial "plan" was to "participate in the HomeLab-2 game jam", with nothing about pesky details such as:

- What game do I want to make?

- What technology will I use?

- How will I find time to do it, given that I'm spending all of September back home in Hungary?

I found the answers to these questions in reverse order. First of all, since I was working from home rather than being on leave for three of the five weeks I spent in Hungary, we didn't really have much planned for those days, so the afternoons were mostly free.

Because the HomeLab-2 is so weak in its processing power (what with its Z80 only doing useful work in less than 20% of the time if you want to have video output), and also because I have never ever done any assembly programming, I decided now or never: I will go full assembly. Perhaps unsurprisingly, perhaps as a parody of myself, I found a way to use Haskell as my Z80 assembler of choice.

This left me with the question of what game to do. Coming up with a completely original concept was out of the question simply because I lack both game design experience and ideas. Also, if there's one thing I've learnt from the Haskell Tiny Games Jam, it is that it's better to crank out multiple poor quality entries (and improve in the process) than it is to aim for the stars (a.k.a. that pottery class story that is hard to find an authoritative origin for). Another constraint was that neither of my emulators supported raster graphics, and I was worried that even if they did, it would be too slow on real hardware; so I wanted to come up with games that would work well with character graphics.

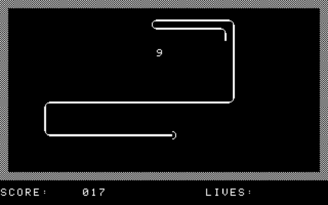

After a half-hearted attempt at Tetris (which I stopped working on when someone else had already submitted a Tetris implementation), the first game I actually finished was Snake. For testing, I just hacked my emulator so that on boot, it loads the game to its fixed starting address, then used CALL from HomeLab BASIC to start it. This was much more convenient than loading from WAV files; doubly so because it took me a while to figure out how exactly to generate a valid WAV file. For the release version, I ended up going via an HTP file (a byte-level representation of the cassette tape contents) which is used by some of the pre-existing emulators. There's an HTP to WAV converter completing the pipeline.

There's not much to say about my Snake. I tried to give it a bit of an arcade machine flair, with an animated attract screen and some simple screen transitions between levels. One of the latter was inspired by Wolfenstein 3D's death transition effect: since the textual video mode has 40×25 = 1000 characters, a 10-bit maximal LFSR (which cycles through all 1023 non-zero 10-bit values) can be used as a computationally cheap way of replacing every character in a seemingly random (yet full-screen-covering) way.
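To make the LFSR trick concrete, here is a small Haskell model of it. This is a sketch, not the Z80 code from the game: the Galois form, the polynomial x^10 + x^7 + 1 (tap mask 0x240, a standard maximal-length choice for 10 bits), and the names `step`, `states` and `screenOrder` are assumptions.

```haskell
import Data.Bits (shiftR, xor, (.&.))
import Data.Word (Word16)

-- | One step of a 10-bit Galois LFSR for x^10 + x^7 + 1 (tap mask 0x240).
step :: Word16 -> Word16
step s
  | s .&. 1 == 1 = (s `shiftR` 1) `xor` 0x240
  | otherwise    = s `shiftR` 1

-- | One full period: all 1023 non-zero 10-bit states, starting from 1.
states :: [Word16]
states = take 1023 (iterate step 1)

-- | Screen offsets 0..999 in LFSR order. The 23 states above 1000 do not
-- correspond to a character cell on a 40x25 screen and are skipped.
screenOrder :: [Int]
screenOrder = [fromIntegral s - 1 | s <- states, s <= 1000]
```

Each step is a shift, a test of one bit and a conditional XOR, which is cheap on an 8-bit CPU, and `screenOrder` still visits every one of the 1000 character cells exactly once.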

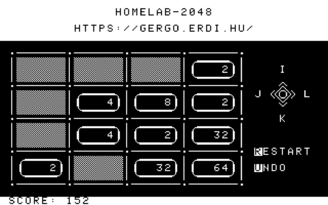

For my second entry, I went with 2048. Shortly after finishing Snake, thanks to Gábor Képes I had the opportunity to try a real HomeLab-2 machine. Feeling how unresponsive the original keyboard is convinced me that real-time games are not the way to go.

The challenge with a 2048-like game on a platform like this is that you have to update a large portion of the screen as the tiles slide around. Having an assembler that is an EDSL made it a breeze to try various speedcoding techniques, but with lots of tiles sliding around, I just couldn't get it to fit within the frame budget, which led to annoying flickering as a half-finished frame would get redrawn before the video refreshing interrupt handler eventually returned to finish the rest of the frame. So I ended up using double buffering, which technically makes each frame longer to draw, but avoids inconsistent visible frames.

Since the original 2048 is a product of modern times, I decided to pair my implementation with a cleaner design: just like a phone app, it boots straight into the game with no intro screen, with all controls shown right on the main screen.

Between these two games, and all the other fun stuff one does when visiting home for a month, September flew by. As October approached, I stumbled upon this year's RetroChallenge announcement and realized the potential for synergy between doing something cool in a last hurrah before the HomeLab-2 game jam deadline and also blogging about it for RetroChallenge. But this meant less than two weeks to produce a magnum opus. Which is why this blogpost series became retrospective: there was no way to finish my third game on time while also writing about it.

But what even was that third and final game idea? Let's find out in the next post.

A small benchmark for functional languages targeting web browsers

2 July 2022 (programming haskell idris javascript)

I had an idea for a retro-gaming project that will require a MOS 6502 emulator that runs smoothly in the browser and can be customized easily. Because I only need the most basic of functionality from the emulation (I don't need to support interrupts, timing accuracy, or even the notion of cycles), I thought I'd just quickly write one. This post is not about the actual retro-gaming project that prompted this, but instead, my experience with the performance of the generated code using various functional-for-web languages.

As I usually do in situations like this, I started with a Haskell implementation to serve as a kind of executable specification, to make sure my understanding of the details of various 6502 instructions is correct. This Haskell implementation is nothing fancy: the outside world is modelled as a class MonadIO m => MonadMachine m, and the CPU itself runs in MonadMachine m => ReaderT CPU m, using IORefs in the CPU record for registers.
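The shape of that design can be sketched as follows. Only the `MonadMachine` class name, the `ReaderT CPU m` stack and the use of IORefs come from the description above; the method names, the two-register `CPU` record, the `Toy` machine and the single implemented instruction (LDA immediate, opcode 0xA9) are assumptions made to keep the example self-contained.

```haskell
import Control.Monad.IO.Class (MonadIO, liftIO)
import Control.Monad.Trans.Class (lift)
import Control.Monad.Trans.Reader (ReaderT, asks, runReaderT)
import Data.IORef (IORef, modifyIORef, newIORef, readIORef, writeIORef)
import Data.Word (Word16, Word8)

-- | The outside world as seen by the CPU (method names are assumptions).
class MonadIO m => MonadMachine m where
    readMem  :: Word16 -> m Word8
    writeMem :: Word16 -> Word8 -> m ()

-- | Registers live in IORefs; only two of them are modelled here.
data CPU = CPU { regA :: IORef Word8, regPC :: IORef Word16 }

type CPUM m = ReaderT CPU m

-- | Read the byte at the program counter and advance it.
fetch :: MonadMachine m => CPUM m Word8
fetch = do
    pcRef <- asks regPC
    pc <- liftIO (readIORef pcRef)
    liftIO (writeIORef pcRef (pc + 1))
    lift (readMem pc)

-- | Execute one instruction; only LDA #imm (0xA9) is implemented.
step :: MonadMachine m => CPUM m ()
step = do
    op <- fetch
    case op of
        0xA9 -> do
            imm <- fetch
            aRef <- asks regA
            liftIO (writeIORef aRef imm)
        _ -> pure ()  -- every other opcode is a no-op in this sketch

-- | A toy machine whose memory is a function stored in an IORef.
newtype Toy a = Toy { runToy :: IORef (Word16 -> Word8) -> IO a }

instance Functor Toy where
    fmap f (Toy g) = Toy (fmap f . g)
instance Applicative Toy where
    pure x = Toy (\_ -> pure x)
    Toy f <*> Toy x = Toy (\r -> f r <*> x r)
instance Monad Toy where
    Toy m >>= k = Toy (\r -> m r >>= \a -> runToy (k a) r)
instance MonadIO Toy where
    liftIO io = Toy (\_ -> io)

instance MonadMachine Toy where
    readMem addr = Toy (\r -> ($ addr) <$> readIORef r)
    writeMem addr v =
        Toy (\r -> modifyIORef r (\mem a -> if a == addr then v else mem a))

-- | Run "LDA #$2A" from address 0 and return the accumulator.
demo :: IO Word8
demo = do
    mem <- newIORef (\addr -> case addr of { 0 -> 0xA9; 1 -> 0x2A; _ -> 0 })
    cpu <- CPU <$> newIORef 0 <*> newIORef 0
    runToy (runReaderT step cpu) mem
    readIORef (regA cpu)
```

Keeping the machine abstract behind a class means the same `step` can run against a test memory like `Toy` or against whatever I/O the host environment provides.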

The languages

Ironing out all the wrinkles took a whole day, but once it worked well enough, it was time for the next step: rewriting it in a language that can then target the browser. PureScript seemed like an obvious choice: it's used a lot in the real world so it should be mature enough, and with how simple my Haskell code is, PureScript's idiosyncrasies compared to Haskell shouldn't really come into play beyond the syntax level. The one thing that annoyed me to no end was that numeric literals are not overloaded, so all Word8s in my code had to be manually fromIntegral'd; and, in an emulator of an eight-bit CPU, there's a ton of Word8 literals...

The second contender was Idris 2. I've had good experience with Idris 1 for the web when I wrote the ICFP Bingo web app, but that project was all about the DOM manipulation and no computation. I was curious what performance I can get from Idris 2's JavaScript backend.

And then I had to include Asterius, a GHC-based compiler emitting WebAssembly. Its GitHub page states it is "actively maintained by Tweag I/O", but it's actually in quite a rough shape: the documentation on how to build it is out of date, so the only way to try it is via a 20G Docker container...

Notably missing from this list is GHCJS. Unfortunately, I couldn't find an up-to-date version of it; it seems the project, or at least work on integrating with standard Haskell tools like Stack, has died off.

To compare performance, I load the same memory image into the various emulators, set the program counter to the same starting point, and run it for 4142 instructions until a certain target instruction is reached. To paper over the effects of the browser's JavaScript JIT engine etc., each test first runs 100 times as a warm-up, and then 100 more times that are measured.
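The warm-up-then-measure scheme is easy to express in a few lines. The following is a native Haskell analogue for illustration only, not the JavaScript harness that runs in the browser; the names `measure` and `demo` are assumptions, and it measures CPU time via `getCPUTime` rather than wall-clock time.

```haskell
import Control.Monad (replicateM_)
import Data.IORef (modifyIORef', newIORef, readIORef)
import System.CPUTime (getCPUTime)

-- | Run an action `warmup` times unmeasured, then `runs` times measured.
-- Returns the average CPU time of a measured run in milliseconds.
measure :: Int -> Int -> IO () -> IO Double
measure warmup runs act = do
    replicateM_ warmup act
    start <- getCPUTime          -- picoseconds
    replicateM_ runs act
    end <- getCPUTime
    pure (fromIntegral (end - start) / 1e9 / fromIntegral runs)

-- | Check that the action really runs warmup + runs times.
demo :: IO (Int, Double)
demo = do
    counter <- newIORef (0 :: Int)
    avg <- measure 100 100 (modifyIORef' counter (+ 1))
    n <- readIORef counter
    pure (n, avg)
```

Averaging over many runs after a warm-up matters most in a JIT-compiled setting, where the first few executions can be much slower than the steady state.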

Besides the PureScript, Idris 2, and GHC/Asterius implementations, I have also added a fourth version to serve as the baseline: vanilla JavaScript. Of course, I tried to make it as close to the functional versions as possible; I hope what I wrote is close to what could reasonably be expected as the output of a compiler.

Performance results

The following numbers come from the collected implementations in this GitHub repo. The PureScript and Idris 2 versions have been improved based on ideas from the respective Discord channels. For PureScript, using the CPS-transformed version of Reader helped; in the case of Idris 2, Stefan Höck's changes of passing implicit arguments instead of using ReaderT, and of using PrimIO when looping over instructions, improved performance dramatically.

| Implementation | Generated code size (bytes) | Average time of 4142 instructions (ms) |
|---|---|---|
| JavaScript | 12,877 | 0.98 |
| ReasonML/ReScript | 27,252 | 1.77 |
| Idris 2 | 60,379 | 6.38 |
| Clean | 225,283 | 39.41 |
| PureScript | 151,536 | 137.03 |
| GHC/Asterius | 1,448,826 | 346.73 |

So Idris 2 comes out way ahead of the pack here: unless you're willing to program in JavaScript, it's by far your best bet both for tiny deployment size and superb performance. All that remains to improve is to compile monad transformer stacks better so that the original ReaderT code works as well as the version using implicit parameters.

To run the benchmark yourself, check out the GitHub repo, run make in the top-level directory, and then use a web browser to open _build/index.html and use the JavaScript console to run await measureAll().

Update on 2022-07-08

I've added ReScript (ReasonML for the browser), which comes in as the new functional champion! I still wouldn't want to write this program in ReScript, though, because of the extra pain caused by its lack of not only overloaded literals, but even type-driven operator resolution...

Also today, I have received a pull request from Camil Staps that adds a Clean implementation.

Cheap and cheerful microcode compression

2 May 2022 (programming haskell clash fpga retro)

This post is about an optimization to the Intel 8080-compatible CPU that I describe in detail in my book Retrocomputing in Clash. It didn't really fit anywhere in the book, and it isn't as closely related to the FPGA design focus of the book, so I thought writing it as a blog post would be a good idea.

Just like the real 8080 from 1974, my Clash implementation is microcoded: the semantics of each machine code instruction of the Intel 8080 is described as a sequence of steps, each step being the machine code instruction of an even simpler, internal micro-CPU. Each of these micro-instruction steps is then executed in exactly one clock cycle.

My 8080 doesn't faithfully replicate the hardware 8080's micro-CPU; in fact, it doesn't replicate it at all. It is a from-scratch design based on a black box understanding of the 8080's instruction set, and the main goal was to make it easy to understand, instead of making it efficient in terms of FPGA resource usage. Of course, since my micro-CPU is different, the micro-instructions have no one-to-one correspondence with those of the original Intel 8080, and so the microcode is completely different as well.

In the CPU, after fetching the machine code instruction byte, we look up the microcode for that byte, and then execute it cycle by cycle until we're done. This post is about how to store that microcode efficiently.
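As a baseline for what "storing the microcode" means, here is a deliberately naive, uncompressed model in plain Haskell: one list of micro-instructions per opcode byte, executed one per cycle. It is a toy and not the micro-CPU from the book: the `MicroOp` set, the two-register state and the internal buffer are hypothetical, and only four real 8080 opcodes are given entries.

```haskell
import Data.Word (Word8)

data Reg = A | B deriving (Eq, Show)

-- | Hypothetical micro-instructions; each one takes one clock cycle.
data MicroOp = Get Reg | Put Reg | Inc deriving (Eq, Show)

-- | Machine state: registers A and B plus an internal buffer.
data St = St { rA, rB, buf :: Word8 } deriving (Eq, Show)

-- | Uncompressed microcode: a separate micro-op list for every opcode.
microcode :: Word8 -> [MicroOp]
microcode 0x78 = [Get B, Put A]       -- MOV A,B
microcode 0x47 = [Get A, Put B]       -- MOV B,A
microcode 0x3C = [Get A, Inc, Put A]  -- INR A
microcode 0x04 = [Get B, Inc, Put B]  -- INR B
microcode _    = []                   -- not modelled in this sketch

-- | Execute a single micro-instruction.
microStep :: St -> MicroOp -> St
microStep s (Get A) = s { buf = rA s }
microStep s (Get B) = s { buf = rB s }
microStep s (Put A) = s { rA = buf s }
microStep s (Put B) = s { rB = buf s }
microStep s Inc     = s { buf = buf s + 1 }

-- | Execute one machine instruction: look up its microcode and run it
-- step by step. Returns the number of cycles spent and the new state.
exec :: Word8 -> St -> (Int, St)
exec op s = (length ops, foldl microStep s ops)
  where
    ops = microcode op
```

Even in this tiny table, `INR A` and `INR B` end in an `Inc` followed by a `Put`, and the two MOVs differ only in which registers they name. That kind of repetition across 256 opcodes is what makes a smarter storage scheme attractive.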

Continue reading »

A tiny computer for Tiny BASIC

17 November 2020 (programming haskell clash fpga retro)

Just a quick post to peel back the curtain a bit on a Clash example I posted to Twitter. My tweet in question shows the following snippet, claiming it is "a complete Intel 8080-based FPGA computer that runs Tiny BASIC":

```haskell
logicBoard
    :: (HiddenClockResetEnable dom)
    => Signal dom (Maybe (Unsigned 8)) -> Signal dom Bool -> Signal dom (Maybe (Unsigned 8))
logicBoard inByte outReady = outByte
  where
    CPUOut{..} = intel8080 CPUIn{..}

    interruptRequest = pure False

    (dataIn, outByte) = memoryMap _addrOut _dataOut $ do
        matchRight $ do
            -- 2 KiB of ROM at 0x0000, loaded from the built image
            mask 0x0000 $ romFromFile (SNat @0x0800) "_build/intel8080/image.bin"
            -- 2 KiB of RAM at 0x0800, followed by 4 KiB of RAM at 0x1000
            mask 0x0800 $ ram0 (SNat @0x0800)
            mask 0x1000 $ ram0 (SNat @0x1000)
        matchLeft $ do
            -- serial interface (ACIA) on port 0x10
            mask 0x10 $ port $ acia inByte outReady
```

I got almost a hundred likes on this tweet, which isn't too bad for a topic as niche as hardware design with Haskell and Clash. Obviously, the above tweet was meant as a brag, not as a detailed technical description; but given the traction it got, I thought I might as well try to expand a bit on it.

Continue reading »

Older posts:

- 15 September 2020: A "very typed" container for representing microcode

- 1 August 2020: Solving text adventure games via symbolic execution

- 7 May 2020: Integrating Verilator and Clash via Cabal

- 30 September 2018: Composable CPU descriptions in CλaSH, and wrap-up of RetroChallenge 2018/09

- 23 September 2018: CPU modeling in CλaSH

- 22 September 2018: Back in the game!

- 15 September 2018: Very high-level simulation of a CλaSH CPU

- 8 September 2018: PS/2 keyboard interface in CλaSH

- 2 September 2018: CλaSH video: VGA signal generator

- 29 August 2018: RetroChallenge 2018: CHIP-8 in CλaSH

- 28 December 2015: Hacks of 2015

- 2 March 2015: Initial version of my Commodore PET

- 5 February 2015: Typed embedding of STLC into Haskell

- 12 July 2014: Arrow's place in the Applicative/Monad hierarchy

- 29 March 2014: My first computer

- 5 October 2013: Yo dawg I heard you like esoteric programming languages...

- 17 February 2013: Write Yourself a Haskell... in Lisp (3 comments)

- 19 January 2013: A Brainfuck CPU in FPGA (3 comments)

- 1 December 2012: Static analysis with Applicatives

- 11 March 2012: Basics for a modular arithmetic type in Agda

- 19 February 2012: Mod-N counters in Agda (6 comments)

- 13 February 2011: Developing iPhone applications in Haskell — a tutorial (5 comments)

- 23 October 2010: The case for compositional type checking (1 comment)

- 7 September 2010: From Register Machines to Brainfuck, part 2

- 6 September 2010: From Register Machines to Brainfuck, part 1

- 23 May 2010: Efficient type-level division for Haskell (1 comment)

- 16 May 2010: Compiling Haskell into C++ template metaprograms

- 19 April 2010: Unary arithmetics is even slower than you'd expect

- 17 October 2008: Generikus programozás (5 comments)